Ceph: placement groups

A quick overview of placement groups in Ceph.

Placement groups provide a means of controlling the level of replication declustering.

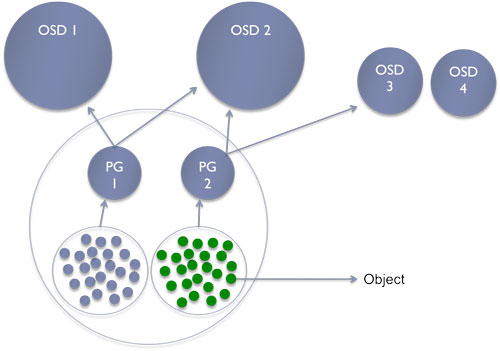

The big picture:

Roughly:

- A pool contains objects

- A PG (placement group) contains objects within a pool

- An object belongs to exactly one PG

- A PG belongs to multiple OSDs
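The "one object, exactly one PG" rule in the list above can be illustrated with a small sketch. This is a simplified stand-in, not Ceph's real algorithm: Ceph uses its own hash function and a stable modulo, whereas here `cksum` and a plain `%` are assumptions chosen for readability. The function name `pg_for_object` and the `pg_num` value of 8 are hypothetical.

```shell
#!/bin/sh
# Simplified illustration only: cksum and plain modulo stand in for
# Ceph's real object hash and stable mod.

# pg_for_object POOL_ID PG_NUM OBJECT_NAME
# Prints a PG ID of the form <pool>.<pg index in hex>.
pg_for_object() {
    pool_id=$1
    pg_num=$2
    name=$3
    # cksum prints "<crc> <byte count>"; keep only the CRC.
    hash=$(printf '%s' "$name" | cksum | cut -d ' ' -f 1)
    # The same name always yields the same hash, hence the same PG.
    printf '%s.%x\n' "$pool_id" "$((hash % pg_num))"
}

# Hypothetical example: pool 4 with 8 placement groups.
pg_for_object 4 8 my-object
```

The point of the sketch is that the mapping is deterministic and needs no lookup table: any client can compute the PG from the object name alone. Mapping the PG to a set of OSDs is a separate step and is not shown here.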

Placement groups help balance data across your cluster. I don’t need to remind you that everything in Ceph is built on top of RADOS. So let’s analyse how objects are stored:

First create a dedicated pool:

$ rados mkpool test

List the current pools:

$ rados lspools

In Ceph, pools are numbered incrementally: the first pool has ID 1, and so on. I have 4 pools, so the ID of my latest pool is 4. This can be confirmed with:

$ ceph osd lspools

Put some data into the pool. Create a 10 MB file, then upload it to the pool as an object:

$ dd if=/dev/zero of=my-object bs=10M count=1

$ rados -p test put my-object my-object

Where is my object?

$ ceph osd map test my-object

This command returns useful information:

- the object my-object belongs to PG 4.0 (a placement group of pool 4)

- the object is currently stored on OSDs 8 and 0

You can check the contents of the OSD's filesystem:

$ ls -lh /srv/ceph/osd8/current/4.0_head/

Unsurprisingly, the directory contains the object that the command above located.
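Since the object is stored on two OSDs, the same check can be repeated for each replica. The helper below is a minimal sketch that only rebuilds the directory path used above. It assumes every OSD follows the same `/srv/ceph/osd<ID>/current/<PG>_head/` layout as this setup, which may not hold on other clusters. The name `pg_dir` is hypothetical.

```shell
#!/bin/sh
# pg_dir OSD_ID PG_ID
# Prints the on-disk directory of a PG, assuming the
# /srv/ceph/osd<ID> data path used in this article.
pg_dir() {
    osd_id=$1
    pg_id=$2
    printf '/srv/ceph/osd%s/current/%s_head/\n' "$osd_id" "$pg_id"
}

# PG 4.0 lives on OSDs 8 and 0; print the directory for each replica.
for osd in 8 0; do
    pg_dir "$osd" 4.0
done
```

Each path has to be listed on the host that runs the corresponding OSD, for example with `ls -lh "$(pg_dir 8 4.0)"`.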

For a PG overview restricted to the test pool:

$ ceph pg dump | egrep -v '^(0\.|1\.|2\.|3\.)' | egrep -v '(^pool\ (0|1|2|3))' | column -t
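The filter above works by excluding every other pool, so it has to be edited whenever a pool is added. A possible alternative is to select the wanted pool directly. This is a sketch, assuming the PG ID (`<pool>.<pg>`) is the first field of each row. The function name `pool_pgs` is hypothetical. Unlike the command above, it drops the header and per-pool summary lines and keeps only the PG rows.

```shell
#!/bin/sh
# pool_pgs POOL_ID
# Reads `ceph pg dump`-style text on stdin and keeps only the rows whose
# first field starts with "<POOL_ID>." (so pool 4 does not match 14.x).
pool_pgs() {
    awk -v pool="$1" 'index($1, pool ".") == 1'
}
```

Usage would look like `ceph pg dump | pool_pgs 4 | column -t`.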

You can see our fresh object in line 12 of the output. You will also notice that my pool has a replica count of 2, which can be confirmed with:

$ ceph osd dump | egrep '^pool 4'

If you want to go further you can also retrieve the pg statistics:

$ ceph pg 4.0 query


I hope this article gave you a good understanding of how Ceph stores data :-). Some of you may wonder how I could write an article about data placement without talking about CRUSH. My answer is simple: that will be part of a future article.
