Make your RBD fly with flashcache

In this article, I will introduce the concept of block device caching and the different solutions available. I will also (since it’s the title of the article) explain how to increase the performance of an RBD device in a Ceph cluster with the help of flashcache.

I. Solutions available

I.1. Flashcache

Flashcache is a block cache for Linux, built as a kernel module, using the Device Mapper. Flashcache supports writeback, writethrough and writearound caching modes.

Pros:

- Running in production at Facebook, and I assume at some other places too

- Built upon the device mapper

Cons:

- Development rate: there were around 20 commits over the last 52 weeks. I put this in the cons section, but it could also mean that Flashcache is fairly stable and simply needs to be maintained rather than actively developed.

- You can’t attach more than one disk to a cache device. The workaround is to isolate with partitions: split your SSD into N partitions, each one dedicated to a single backing device.
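Here is a minimal sketch of the partition trick. The hypothetical `fc_partition_plan` helper prints the parted commands that split an SSD into N equal GPT partitions, one per RBD device, but it does not run them. The equal sizes and the GPT label are my assumptions, so adapt them to your needs.

```shell
# Hypothetical helper: print (never execute) the parted commands that split
# an SSD into N equal GPT partitions, one per RBD device to cache.
# Usage: fc_partition_plan /dev/sdb 4
fc_partition_plan() {
  local ssd="$1" n="$2" i start end
  if [ "$n" -lt 1 ]; then
    echo "need at least one partition" >&2
    return 1
  fi
  # A fresh GPT label wipes the existing partition table
  echo "parted -s $ssd mklabel gpt"
  i=1
  while [ "$i" -le "$n" ]; do
    # Integer percentages: partition i spans (i-1)/n .. i/n of the disk
    start=$(( (i - 1) * 100 / n ))
    end=$(( i * 100 / n ))
    # With a GPT label, parted takes a partition name (fc1, fc2, ...) here
    echo "parted -s $ssd mkpart fc$i ${start}% ${end}%"
    i=$(( i + 1 ))
  done
}
```

Review the output before running each line with sudo. Keep in mind that mklabel erases the SSD's current partition table.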


I.2. dm-cache

Dm-cache is a generic block-level disk cache for storage networking. It is built upon the Linux device-mapper, a generic block device virtualization infrastructure. It can be transparently plugged into a client of any storage system, including SAN, iSCSI and AoE, and supports dynamic customization for policy-guided optimizations.

Pros:

- Running in production at CloudVPS, where it provides scalable virtual machine storage

- Built upon the device mapper

Cons:

- Only supports writethrough cache mode

- Same remark as for Flashcache: around 35 commits over the past 52 weeks…


I.3. bcache

Bcache is a Linux kernel block layer cache. It allows one or more fast disk drives such as flash-based solid state drives (SSDs) to act as a cache for one or more slower hard disk drives.

Pros:

- Looks feature-rich

- A single cache device can be used to cache an arbitrary number of backing devices

- Claims to have a good mechanism to prevent data loss in writeback mode

- Good algorithm that flushes data to the underlying block device in case the cache device dies

For more, see the feature list on the official website.

Cons:

- Requires compiling your own kernel…

- Not built upon the device mapper

- Commit rate pretty low as well

In the end, bcache remains the most feature-rich, but as far as I’m concerned I don’t want to run a custom-compiled kernel in production. Dm-cache looks nice as well, but the patch only works with the 3.0 kernel. That leaves flashcache, which seems to be a good candidate since it works on 3.x kernels.

II. Begin with flashcache

II.1. Installation

Even though the documentation specifies 2.6.x kernels (the tested kernels), flashcache seems to be compatible with recent kernels. Below are the kernels I tested:

- 3.2.0-23-generic

- 3.5.0-10-generic

You will notice that it’s really easy to install flashcache. As usual, start by cloning the repository and then compile flashcache:

$ sudo git clone https://github.com/facebook/flashcache.git
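Here is a minimal sketch of the clone-and-compile steps. It assumes that git, make and the headers of the running kernel are installed. It also assumes that the top-level Makefile of the repository builds and installs flashcache, so check the README of your checkout. The `run` wrapper and the `DRY_RUN` variable are hypothetical helpers that let you preview the commands first.

```shell
# Hypothetical dry-run wrapper: with DRY_RUN set, print the command
# instead of running it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Sketch of the clone-and-build steps (assumed Makefile targets).
install_flashcache() {
  run git clone https://github.com/facebook/flashcache.git
  run make -C flashcache
  run sudo make -C flashcache install
}
```

`DRY_RUN=1 install_flashcache` prints the three commands. Call it without `DRY_RUN` to actually execute them.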

Load the kernel module:

$ sudo modprobe flashcache

II.2. Your first flashcache device

II.2.1. RBD preparations

Simply create a pool called flashcache and then an image inside it:

$ rados mkpool flashcache

Map this image on your host machine and put a filesystem on it:

$ rbd -p flashcache map fc
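Here is a minimal sketch of the whole RBD preparation. The `prepare_rbd` helper, the image size (in MB) and the choice of ext4 are my assumptions; the /dev/rbd/<pool>/<image> path is the one used in the rest of the article. Note that on recent Ceph releases pools are created with `ceph osd pool create` instead of `rados mkpool`. The `run` wrapper is a hypothetical dry-run helper.

```shell
# Hypothetical dry-run wrapper: with DRY_RUN set, print the command
# instead of running it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Hypothetical helper: create the pool and the image, map it, format it.
# Usage: prepare_rbd POOL IMAGE SIZE_MB
prepare_rbd() {
  local pool="$1" image="$2" size_mb="$3"
  run rados mkpool "$pool"
  run rbd -p "$pool" create "$image" --size "$size_mb"
  run sudo rbd -p "$pool" map "$image"
  # udev exposes the mapped image as /dev/rbd/<pool>/<image>
  run sudo mkfs.ext4 "/dev/rbd/$pool/$image"
}
```

For example, `DRY_RUN=1 prepare_rbd flashcache fc 10240` previews the four steps for a 10 GB image.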

Your RBD device is now ready to use.

II.2.2. Flashcache device

Let’s assume, for the sake of simplicity, that our SSD is detected as /dev/sdb and that a partition has been created on it. Don’t forget to partition it according to the number of RBD devices you want to cache (if you want to cache more than one).

$ sudo flashcache_create -p back -s 15G -b 4k rbd_fc /dev/sdb1 /dev/rbd/flashcache/fc

Options explained:

- -p back: operate in writeback mode, which means that we cache both write and read requests

- -s 15G: set the size of the cache; it should be the same as the size of your partition

- -b 4k: set the block size to 4K

- rbd_fc: name of your flashcache device
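Besides back (writeback), the -p option of flashcache_create also accepts thru (writethrough) and around (writearound). Here is a minimal sketch of a hypothetical `fc_create_cmd` helper that builds the command line from a mode name and the partition size in bytes, for instance as reported by `blockdev --getsize64 /dev/sdb1`. This way -s follows the partition size. The helper only prints the command.

```shell
# Hypothetical helper: print a flashcache_create command line.
# Usage: fc_create_cmd MODE CACHE_BYTES NAME SSD_PARTITION DISK
#   MODE is writeback, writethrough or writearound
fc_create_cmd() {
  local mode="$1" cache_bytes="$2" name="$3" ssd="$4" disk="$5" policy
  case "$mode" in
    writeback)    policy=back ;;
    writethrough) policy=thru ;;
    writearound)  policy=around ;;
    *) echo "unknown mode: $mode" >&2; return 1 ;;
  esac
  # Size rounded down to whole MiB so it never exceeds the partition
  echo "flashcache_create -p $policy -s $(( cache_bytes / 1048576 ))m -b 4k $name $ssd $disk"
}
```

Usage, once you have checked the printed command:

`sudo $(fc_create_cmd writeback "$(sudo blockdev --getsize64 /dev/sdb1)" rbd_fc /dev/sdb1 /dev/rbd/flashcache/fc)`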

The device has been created in /dev/mapper/.

Replacement policy is either FIFO or LRU within a cache set. The default is FIFO, but the policy can be switched at any point at run time via a sysctl (see the configuration and tuning section of the flashcache documentation). As far as I’m concerned, I prefer LRU (Least Recently Used), which I found more efficient in my environment (web applications). This can be changed on the fly like so:

$ sudo sysctl -w dev.flashcache.rbd_fc.reclaim_policy=1
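The reclaim_policy value is 0 for FIFO and 1 for LRU. If you prefer to refer to the policy by name, here is a minimal sketch of a hypothetical `fc_reclaim_sysctl` helper that prints the matching sysctl command. The sysctl path is built from the name you pass in. Depending on your flashcache version, that name may be the device-mapper name (rbd_fc here) or a cache+disk name like the /proc entry in the status section, so check `sysctl -a | grep flashcache` first.

```shell
# Hypothetical helper: print the sysctl command selecting a reclaim policy.
# Usage: fc_reclaim_sysctl NAME fifo|lru
fc_reclaim_sysctl() {
  local name="$1" policy="$2" value
  case "$policy" in
    fifo) value=0 ;;
    lru)  value=1 ;;
    *) echo "unknown policy: $policy" >&2; return 1 ;;
  esac
  echo "sysctl -w dev.flashcache.${name}.reclaim_policy=${value}"
}
```

Run the printed command with sudo, then read the value back with `sysctl -n dev.flashcache.rbd_fc.reclaim_policy`.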

Now you can start to use it:

$ sudo mount /dev/mapper/rbd_fc /mnt

TADA ! :D

Note: flashcache puts a ‘lock’ on the underlying block device, so it’s not possible to use our RBD device directly while flashcache is operating.

II.2.3. Quick admin guide

II.2.3.1. Stop the flashcache device

Here is how to stop the flashcache device:

$ sudo dmsetup remove rbd_fc

This syncs all the dirty blocks to our RBD device.

II.2.3.2. Destroy the flashcache device

Completely destroy the flashcache device, and its content with it:

$ sudo flashcache_destroy /dev/sdb1

II.2.3.3. Load the flashcache device

Load the flashcache device:

$ sudo flashcache_load /dev/sdb1 rbd_fc

II.2.3.4. Check the device status

Some useful info:

$ ls /proc/flashcache/loop0+bencha/
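In this example, the /proc directory name joins the cache device name and the backing device name with a ‘+’ (loop0 and bencha). Here is a hypothetical `fc_proc_dir` helper that rebuilds the path from the two device paths, assuming the same naming holds on your setup.

```shell
# Hypothetical helper: build /proc/flashcache/<ssd>+<disk> from device paths,
# following the naming seen in the example (loop0+bencha).
# Usage: fc_proc_dir SSD_DEVICE DISK_DEVICE
fc_proc_dir() {
  local ssd="$1" disk="$2"
  echo "/proc/flashcache/$(basename "$ssd")+$(basename "$disk")"
}
```

For example, `ls "$(fc_proc_dir /dev/loop0 /dev/rbd/bench/bencha)"` lists the directory shown above. It typically contains statistics files such as flashcache_stats and flashcache_errors.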

II.3. Don’t have any SSD? Wanna play with flashcache?

If you only want to evaluate how it works, you don’t really need to buy a disk (SSD or otherwise). All we need is a block device, so we are going to use a loop device: it will act as the cache device for flashcache. Of course this won’t bring any performance gain; it’s just for testing purposes.

$ dd if=/dev/zero of=fc bs=1M count=2048

Check for a free loop device and then attach your newly created file to it.

$ losetup -f
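Here is a minimal sketch of the file creation step as a hypothetical `fc_make_backing_file` helper. It writes 1 MiB blocks because Linux caps a single read() just below 2 GiB. As a result, a single bs=2G block read from /dev/zero comes out 4 KiB short.

```shell
# Hypothetical helper: create a zero-filled file of SIZE_MIB mebibytes
# to back a loop device. 1 MiB blocks avoid the short read that a single
# 2 GiB block gets from /dev/zero.
# Usage: fc_make_backing_file FILE SIZE_MIB
fc_make_backing_file() {
  local file="$1" size_mib="$2"
  dd if=/dev/zero of="$file" bs=1M count="$size_mib"
}
```

Next, `sudo losetup -f --show fc` attaches the file to the first free loop device and prints that device's name. The rest of this section assumes it is /dev/loop0.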

There is no need to put a filesystem on it, since we use the one on the RBD device.

Note: using partitions with a loop device is a little bit tricky. Creating one or more partitions is not a problem, but putting a filesystem on them and mounting them is: we have to play with offsets.
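The offset is the partition’s start sector (the Start column of `fdisk -l fc`) multiplied by the sector size, usually 512 bytes. Here is a minimal sketch with a hypothetical `fc_loop_offset` helper.

```shell
# Hypothetical helper: byte offset of a partition inside an image file,
# i.e. start sector times sector size (512 bytes unless told otherwise).
# Usage: fc_loop_offset START_SECTOR [SECTOR_SIZE]
fc_loop_offset() {
  local start_sector="$1" sector_size="${2:-512}"
  echo $(( start_sector * sector_size ))
}
```

For a partition starting at sector 2048, run `sudo losetup -f --show -o "$(fc_loop_offset 2048)" fc`. Newer util-linux releases also offer `losetup -P`, which scans the partition table and creates /dev/loopXpN devices, so you can skip the arithmetic.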

$ sudo flashcache_create -p back -s 512m -b 4k loop_rbd_bencha /dev/loop0 /dev/rbd/bench/bencha

Check your device status:

$ sudo dmsetup ls

Look at the device table:

$ sudo dmsetup table loop_rbd_bencha

III. Benchmarks

For heavy and intensive benchmarks I used fio with the following configuration file:

[global]
bs=4k
ioengine=libaio
iodepth=4
size=10g
direct=1
runtime=60
directory=/srv/fc/1/fio
filename=rbd.test.file

[seq-read]
rw=read
stonewall

[rand-read]
rw=randread
stonewall

[seq-write]
rw=write
stonewall

[rand-write]
rw=randwrite
stonewall

III.1. Raw perf for the SSD

First I evaluated the raw performance of my SSD (an OCZ Vertex 4 256GB):

III.1.1. Basic DD

Huge block size test:

$ for i in `seq 4`; do dd if=/dev/zero of=/mnt/bench$i bs=1G count=1 oflag=direct ; done

III.1.2. FIO

fio bench:

$ sudo fio fio.fio

III.2. Raw perf for the rbd device

Then I evaluated the raw performance of the RBD device.

III.2.1. Basic DD

Huge block size test:

$ for i in `seq 4`; do dd if=/dev/zero of=/srv/bench$i bs=1G count=1 oflag=direct ; done

III.2.2. Fio

Fio bench:

$ sudo fio fio.fio

III.3. Flashcache performance

III.3.1. Basic DD

Huge block size test:

$ for i in `seq 4`; do dd if=/dev/zero of=/srv/bench-flash$i bs=1G count=1 oflag=direct ; done

III.3.2. Fio

Fio bench:

$ sudo fio fio.fio

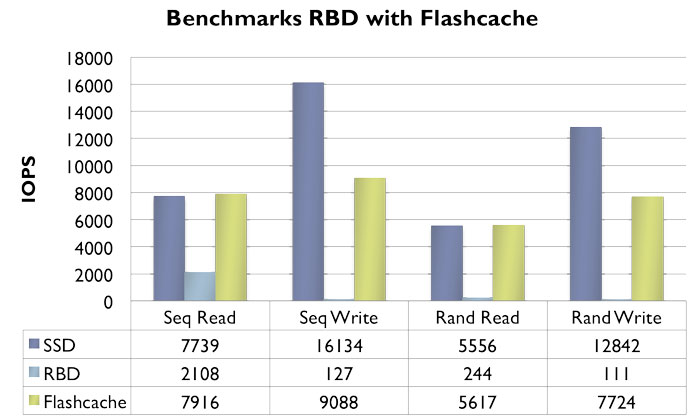

III.4. Chart results

A short summary in a more readable format:

Random benchmarks found on bcache IRC:

IV. High availability corner

Here I introduce the integration of Flashcache into the HA stack with Pacemaker.

Note: if you use writeback mode, you must use DRBD to replicate the blocks of your Flashcache device to ensure consistency at the block level. With writethrough and writearound it’s not necessary, because every write request goes directly to the underlying block device. I won’t detail the DRBD setup since you can find it in several articles on my website; use the search button ;-).

All the prerequisites for RBD and Pacemaker can be found in my previous article. The flashcache RA came with the git clone done earlier. I would like to thank Florian Haas from Hastexo for this resource agent.

First configure the rbd primitive:

$ sudo crm configure primitive p_rbd ocf:ceph:rbd.in \

And Flashcache:

$ sudo crm configure primitive p_flashcache ocf:flashcache:flashcache \

Then the Filesystem:

$ sudo crm configure primitive p_fs ocf:heartbeat:Filesystem \

Finally, create a group for all these resources:

$ sudo crm configure group g_disk p_rbd p_flashcache p_fs

You should see something like this:

============
Last updated: Thu Nov 15 01:10:54 2012
Last change: Thu Nov 15 01:06:16 2012 via cibadmin on ha-01
Stack: openais
Current DC: ha-01 - partition with quorum
Version: 1.1.6-9971ebba4494012a93c03b40a2c58ec0eb60f50c
2 Nodes configured, 2 expected votes
3 Resources configured.
============

Online: [ ha-01 ha-02 ]

 Resource Group: g_disk
     p_rbd (ocf::ceph:rbd.in): Started ha-01
     p_flashcache (ocf::flashcache:flashcache): Started ha-01
     p_fs (ocf::heartbeat:Filesystem): Started ha-01

Here we used writethrough mode for our flashcache device, so we don’t need to replicate its content.

I truly believe in the potential of bcache, and I will probably consider it once it is implemented on top of the device mapper (easier, no funky kernel compilation) and, why not, merged into the mainline kernel (it’s not for today…). For the moment, I think Flashcache is a really good solution that suits most of our needs. As always, feel free to comment, criticize and ask questions in the comment section below ;-)
