Ceph: manage storage zones with CRUSH

This article introduces a simple use case for storage providers. Some customers are willing to pay more for fast storage, while others would rather pay less for reasonably performing storage.

I. Use case

Roughly speaking, your infrastructure could be based on several types of servers:

- storage nodes full of SSD disks

- storage nodes full of SAS disks

- storage nodes full of SATA disks

Offering a different storage tier on each type of disk is possible with the help of the CRUSH map.

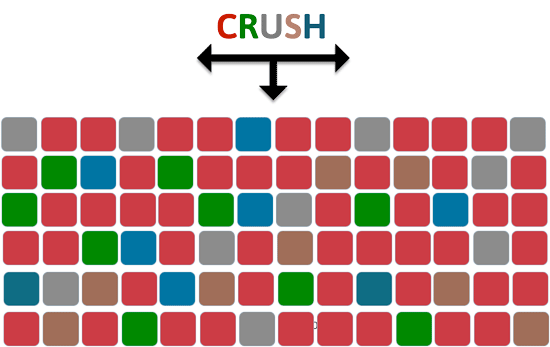

II. A bit about CRUSH

CRUSH stands for Controlled Replication Under Scalable Hashing:

- Pseudo-random placement algorithm

- Fast calculation, no lookup

- Repeatable, deterministic

- Ensures even distribution

- Stable mapping

- Limited data migration

- Rule-based configuration, rules determine data placement

- Infrastructure topology aware, the map knows the structure of your infrastructure (nodes, racks, rows, datacenters)

- Allows weighting, every OSD has a weight

For more details, check the official Ceph documentation.
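As a rough illustration of the properties listed above (deterministic, no lookup table, weighted, limited data migration), here is a minimal sketch loosely modelled on the idea of drawing a weighted "straw" per OSD and keeping the longest ones. It is not Ceph's implementation: SHA-256 stands in for the rjenkins hash, there is no bucket hierarchy, and the names `straw_draw` and `place` are made up for this example. Weights are assumed to be strictly positive.

```python
import hashlib
import math
from typing import Dict, List


def straw_draw(object_name: str, osd: str, weight: float) -> float:
    """Deterministic pseudo-random 'straw length' for one (object, OSD) pair."""
    digest = hashlib.sha256(f"{object_name}:{osd}".encode()).digest()
    # Map the first 8 bytes of the hash to a float in (0, 1].
    u = (int.from_bytes(digest[:8], "big") + 1) / 2**64
    # ln(u) is <= 0; dividing by a larger weight pulls it closer to 0,
    # so heavier OSDs win the draw proportionally more often.
    return math.log(u) / weight


def place(object_name: str, weights: Dict[str, float], replicas: int) -> List[str]:
    """Pick `replicas` distinct OSDs by keeping the longest straws."""
    ranked = sorted(
        weights,
        key=lambda osd: straw_draw(object_name, osd, weights[osd]),
        reverse=True,
    )
    return ranked[:replicas]


if __name__ == "__main__":
    osds = {"osd.0": 1.0, "osd.1": 1.0, "osd.2": 1.0, "osd.3": 1.0}
    print(place("some-object", osds, 2))
```

Because every client computes the same draws from the object name alone, no central lookup is needed, and removing an OSD that does not hold an object leaves that object's placement unchanged.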

III. Setup

What are we going to do?

- Retrieve the current CRUSH Map

- Decompile the CRUSH Map

- Edit it: we will add 2 buckets and 2 rulesets

- Recompile the new CRUSH Map

- Re-inject the new CRUSH Map
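The steps above can be sketched as a small helper that only builds the command lines, without running anything. The `ceph osd getcrushmap`, `crushtool -d`, `crushtool -c` and `ceph osd setcrushmap` commands are the standard tools for this workflow; the function name `crush_edit_commands` and its return shape are assumptions made for this example.

```python
import shlex
from typing import List, Tuple

Command = List[str]


def crush_edit_commands(
    binary_map: str, text_map: str, new_binary_map: str
) -> Tuple[List[Command], List[Command]]:
    """Return (commands to run before editing, commands to run after editing)."""
    before_edit = [
        # Retrieve the compiled CRUSH map from the cluster.
        ["ceph", "osd", "getcrushmap", "-o", binary_map],
        # Decompile it into an editable text file.
        ["crushtool", "-d", binary_map, "-o", text_map],
    ]
    after_edit = [
        # Recompile the edited text file.
        ["crushtool", "-c", text_map, "-o", new_binary_map],
        # Inject the new compiled map into the cluster.
        ["ceph", "osd", "setcrushmap", "-i", new_binary_map],
    ]
    return before_edit, after_edit


if __name__ == "__main__":
    before, after = crush_edit_commands(
        "ma-crush-map", "ma-crush-map.txt", "ma-nouvelle-crush-map"
    )
    for cmd in before:
        print(shlex.join(cmd))
    print("# ... edit ma-crush-map.txt by hand ...")
    for cmd in after:
        print(shlex.join(cmd))
```

Keeping the two halves separate makes the manual editing step explicit: nothing is injected until the text file has been reviewed and recompiled.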

III.1. Begin

Grab your current CRUSH map and decompile it:

$ ceph osd getcrushmap -o ma-crush-map

$ crushtool -d ma-crush-map -o ma-crush-map.txt

For the sake of simplicity, let’s assume that you have 4 OSDs:

- 2 of them are SAS disks

- 2 of them are enterprise SSDs

And here is the OSD tree:

$ ceph osd tree

III.2. Default CRUSH map

Edit the decompiled CRUSH map (ma-crush-map.txt). By default it looks like this:

# begin crush map

# devices

device 0 osd.0

device 1 osd.1

device 2 osd.2

device 3 osd.3

# types

type 0 osd

type 1 host

type 2 rack

type 3 row

type 4 room

type 5 datacenter

type 6 pool

# buckets

host ceph-01 {

id -2 # do not change unnecessarily

# weight 2.000

alg straw

hash 0 # rjenkins1

item osd.0 weight 1.000

item osd.1 weight 1.000

}

host ceph-02 {

id -4 # do not change unnecessarily

# weight 2.000

alg straw

hash 0 # rjenkins1

item osd.2 weight 1.000

item osd.3 weight 1.000

}

rack le-rack {

id -3 # do not change unnecessarily

# weight 4.000

alg straw

hash 0 # rjenkins1

item ceph-01 weight 2.000

item ceph-02 weight 2.000

}

pool default {

id -1 # do not change unnecessarily

# weight 4.000

alg straw

hash 0 # rjenkins1

item le-rack weight 4.000

}

# rules

rule data {

ruleset 0

type replicated

min_size 1

max_size 10

step take default

step chooseleaf firstn 0 type host

step emit

}

rule metadata {

ruleset 1

type replicated

min_size 1

max_size 10

step take default

step chooseleaf firstn 0 type host

step emit

}

rule rbd {

ruleset 2

type replicated

min_size 1

max_size 10

step take default

step chooseleaf firstn 0 type host

step emit

}

# end crush map
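When editing a map by hand, it is easy to let weights drift out of sync. In a decompiled map, the weight of a bucket is the sum of the weights of its items, and a parent bucket should reference each child with that same total. The following is a minimal sketch of a checker for a single bucket block; the helper names and the regular expression are assumptions made for this example, not part of any Ceph tool.

```python
import re
from typing import Dict

# Matches lines such as "item osd.0 weight 1.000" inside a bucket block.
ITEM_RE = re.compile(r"^\s*item\s+(\S+)\s+weight\s+([0-9.]+)", re.MULTILINE)

# Sample bucket text in the same syntax as the decompiled map.
HOST_CEPH_01 = """
host ceph-01 {
    id -2
    alg straw
    hash 0
    item osd.0 weight 1.000
    item osd.1 weight 1.000
}
"""


def bucket_item_weights(bucket_text: str) -> Dict[str, float]:
    """Return {item name: weight} for every 'item' line in a bucket block."""
    return {name: float(weight) for name, weight in ITEM_RE.findall(bucket_text)}


def bucket_weight(bucket_text: str) -> float:
    """Total weight of a bucket, computed as the sum of its item weights."""
    return sum(bucket_item_weights(bucket_text).values())


if __name__ == "__main__":
    print(bucket_item_weights(HOST_CEPH_01))
    print(bucket_weight(HOST_CEPH_01))
```

Here the two OSDs of weight 1.000 give the host a total of 2.000, which matches the `item ceph-01 weight 2.000` line in the rack bucket.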

III.3. Add buckets and rules

Now we have to add 2 new buckets, each with its own rule:

- one for the SSD pool

- one for the SAS pool

III.3.1. SSD Pool

Add a bucket for the SSD pool:

pool ssd {

id -5 # do not change unnecessarily

alg straw

hash 0 # rjenkins1

item osd.0 weight 1.000

item osd.1 weight 1.000

}

Add a rule for the newly created bucket:

rule ssd {

ruleset 3

type replicated

min_size 1

max_size 10

step take ssd

step choose firstn 0 type osd

step emit

}

III.3.2. SAS Pool

Add a bucket for the SAS pool:

pool sas {

id -6 # do not change unnecessarily

alg straw

hash 0 # rjenkins1

item osd.2 weight 1.000

item osd.3 weight 1.000

}

Add a rule for the newly created bucket:

rule sas {

ruleset 4

type replicated

min_size 1

max_size 10

step take sas

step choose firstn 0 type osd

step emit

}
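To make the effect of the two rules concrete, here is a simplified sketch of what `step take`, `step choose firstn 0 type osd` and `step emit` amount to: start from one bucket, pick as many distinct OSDs as the pool has replicas, and return them. This is only an approximation. The hash-based ranking stands in for CRUSH's real selection, weights are ignored because they are all equal here, and `BUCKETS` and `run_rule` are hypothetical names.

```python
import hashlib
from typing import Dict, List

# Hypothetical in-memory view of the two buckets added to the map.
BUCKETS: Dict[str, List[str]] = {
    "ssd": ["osd.0", "osd.1"],
    "sas": ["osd.2", "osd.3"],
}


def run_rule(take: str, object_name: str, pool_size: int) -> List[str]:
    """Mimic 'step take <bucket>; step choose firstn 0 type osd; step emit'."""
    # step take: restrict the candidates to the OSDs of one bucket.
    candidates = BUCKETS[take]
    # step choose firstn 0 type osd: rank the OSDs deterministically and
    # keep as many distinct ones as the pool has replicas.
    ranked = sorted(
        candidates,
        key=lambda osd: hashlib.sha256(f"{object_name}:{osd}".encode()).hexdigest(),
    )
    # step emit: return the selection.
    return ranked[:pool_size]


if __name__ == "__main__":
    print(run_rule("ssd", "ssd.pool.object", 2))
    print(run_rule("sas", "sas.pool.object", 2))
```

Whatever the object name, a pool using the ssd rule can only land on osd.0 and osd.1. Note that because the rules choose by type osd rather than by host, and both OSDs of each bucket sit on the same host in this example, all replicas of an object end up on one host.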

Finally, recompile and inject the new CRUSH map:

$ crushtool -c ma-crush-map.txt -o ma-nouvelle-crush-map

$ ceph osd setcrushmap -i ma-nouvelle-crush-map

III.4. Create and configure the pools

Create your 2 new pools:

$ rados mkpool ssd

$ rados mkpool sas

Assign a ruleset to each pool:

$ ceph osd pool set ssd crush_ruleset 3

$ ceph osd pool set sas crush_ruleset 4

Check that the changes have been applied successfully:

$ ceph osd dump | grep -E 'ssd|sas'

III.5. Test it

Just create a test file and put it into your object store:

$ dd if=/dev/zero of=ssd.pool bs=1M count=512 conv=fsync

$ rados -p ssd put ssd.pool.object ssd.pool

Check which placement group and OSDs the object maps to:

$ ceph osd map ssd ssd.pool.object
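The mapping reported by `ceph osd map` starts with the object name being hashed to a placement group inside the pool; CRUSH then maps that placement group to OSDs. The sketch below illustrates only the first step, under loud assumptions: CRC32 and a plain modulo stand in for Ceph's own hash and its stable-modulo function, and the pool id and `pg_num` values are hypothetical.

```python
import zlib


def object_to_pg(pool_id: int, object_name: str, pg_num: int) -> str:
    """Return a placement group id in the usual '<pool_id>.<hex seed>' form."""
    # Hash the object name and fold it into the pool's number of PGs.
    seed = zlib.crc32(object_name.encode()) % pg_num
    return f"{pool_id}.{seed:x}"


if __name__ == "__main__":
    # Hypothetical values: pool id 3 with 8 placement groups.
    print(object_to_pg(3, "ssd.pool.object", 8))
```

The real command additionally prints the up and acting OSD sets for the placement group, which for the ssd pool should only contain osd.0 and osd.1.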

CRUSH Rules! As you can see from this article, CRUSH allows you to perform amazing things. The CRUSH map can become very complex, but it brings a lot of flexibility. Happy CRUSH mapping ;-)
