Ceph and Cinder multi-backend

Grizzly brought multi-backend functionality to Cinder, along with tons of new drivers. The main purpose of this article is to demonstrate how we can take advantage of the tiering capability of Ceph.

Materials to start playing with Ceph. This Vagrant box contains an all-in-one Ceph installation.

Sometimes it’s just fun to put the theory to the test, if only to notice “oh well, it works as expected”. This is why today I’d like to share some experiments with 2 really specific flags: noout and nodown. The behaviors described in the article are well known because of the design of Ceph, so don’t yell at me: ‘Tell us something we don’t know!’. Simply see this article as a set of exercises that demonstrate some Ceph internal functions :-).

Quite recently François Charlier and I worked together on the Puppet modules for Ceph on behalf of our employer eNovance. In fact, François started to work on them last summer, and back then he completed the Monitor manifests. So basically, we worked on the OSD manifest. The modules are in pretty good shape, so we thought it was important to share them with the community. That’s enough talk, let’s dive into these modules and explain what they do. See below what’s available:

-f -d agcount=<cpu-core-number> -l size=1024m -n size=64k and finally mounted with: rw,noatime,inode64. Then it will mount all of them and append the appropriate lines to the fstab file of each storage node. Finally the OSDs will be added into Ceph. All the necessary materials (sources and how-to) are publicly available (and for free) under the AGPL license on eNovance’s GitHub. Those manifests do the job quite nicely, although we still need to work on MDS (90% done, just needs validation), RGW (0% done) and a more flexible implementation (authentication and filesystem support). Obviously comments, constructive criticism and feedback are more than welcome. So don’t hesitate to drop an email to either François ([email protected]) or me ([email protected]) if you have further questions.

Short short update.

A Placement Group (PG) aggregates a series of objects into a group, and maps the group to a series of OSDs. A common mistake while creating a pool is to use the rados command, which by default creates a pool of 8 PGs. Sometimes you don’t really know how to set this value, so you use the ceph command but give it an extremely high value. Both cases are bad and could lead to some unfortunate situations. In this article, I will explore some methods to work around this major problem.
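To make the "too few vs. too many" trade-off concrete, here is a minimal Python sketch of the commonly cited rule of thumb for sizing a pool: roughly 100 PGs per OSD, divided by the replica count, rounded up to the next power of two. The function name `suggested_pg_count` and the default target of 100 PGs per OSD are assumptions made for illustration; they are not taken from the article, so treat the result as a starting point rather than a definitive value.

```python
def suggested_pg_count(num_osds: int, replicas: int, pgs_per_osd: int = 100) -> int:
    """Rule-of-thumb PG count for a pool (hypothetical helper).

    Computes (num_osds * pgs_per_osd) / replicas and rounds the result
    up to the next power of two.
    """
    if num_osds < 1 or replicas < 1 or pgs_per_osd < 1:
        raise ValueError("num_osds, replicas and pgs_per_osd must be >= 1")

    # Integer ceiling division avoids floating-point rounding surprises.
    raw = -(-(num_osds * pgs_per_osd) // replicas)

    # Round up to the next power of two.
    pg_count = 1
    while pg_count < raw:
        pg_count <<= 1
    return pg_count


if __name__ == "__main__":
    # 10 OSDs, 3 replicas: 1000 / 3 = 333.3..., rounded up to 512
    print(suggested_pg_count(10, 3))
```

For a small cluster this already lands far above the default of 8 PGs, while still staying well below the "extremely high" values that put unnecessary load on the OSDs.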

The title of the article is a bit wrong, but it’s certainly the easiest to understand :-).

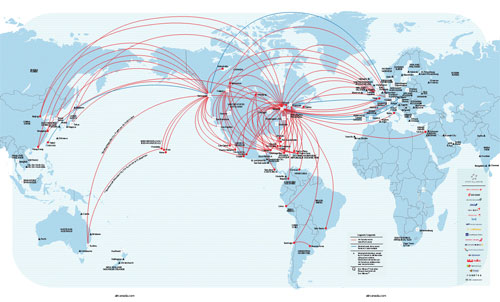

It’s fair to say that geo-replication is one of the features most requested by the community. This article is a draft, a PoC about Ceph geo-replication.

Disclaimer: yes this setup is tricky and I don’t guarantee that this will work for you.

How to use a memory profiler to track memory usage of Ceph daemons!

A lot of new features came with version 0.55 of Ceph; one of them is that CephX authentication is enabled by default. If you run v0.48 Argonaut without CephX and want to update to the latest Bobtail, you might run into some problems if you don’t edit your configuration file.