OpenStack Glance NFS and Compute local direct fetch

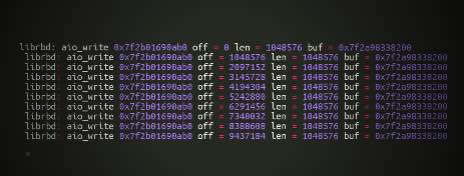

This feature has been around for quite a while now; if I remember correctly, it was introduced in the Grizzly release. However, I never really got the chance to play around with it. Let's assume that you use NFS to store Glance images. By default, booting an instance means fetching its image from Glance to the Nova compute node. The image is streamed over the network, which consumes bandwidth and makes the boot process longer. Fortunately, OpenStack Nova can be configured to access Glance images directly from a local filesystem path, which is ideal for our NFS scenario.
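To make the idea concrete, here is a minimal Python sketch of the decision a compute node has to make: copy the image straight from the shared filesystem when it can, and stream it otherwise. This is not Nova's actual code. The function names (`local_path_for`, `fetch_image`), the `stream_from_glance` callback and the assumption that the NFS share is mounted at the same path on the compute node are all mine, for illustration only.

```python
import os
import shutil
from urllib.parse import urlparse


def local_path_for(direct_url, mountpoint):
    """Return the local path behind a file:// URL if it lives under
    mountpoint, otherwise None. (Hypothetical helper, not a Nova API.)"""
    parsed = urlparse(direct_url)
    if parsed.scheme != "file":
        return None  # e.g. http:// or rbd:// locations cannot be copied locally
    if not os.path.isabs(parsed.path):
        return None
    path = os.path.normpath(parsed.path)
    root = os.path.abspath(mountpoint)
    # Only accept paths that really sit inside the shared mount point.
    if os.path.commonpath([path, root]) != root:
        return None
    return path


def fetch_image(direct_url, mountpoint, dest, stream_from_glance):
    """Copy the image from the shared filesystem when possible,
    otherwise fall back to streaming it (the default behaviour)."""
    local = local_path_for(direct_url, mountpoint)
    if local is not None and os.path.isfile(local):
        shutil.copyfile(local, dest)  # plain file copy, no Glance API transfer
        return "direct"
    # Assumption: the caller supplies a function that downloads to dest.
    stream_from_glance(dest)
    return "streamed"
```

With a share mounted at, say, `/srv/glance` (an example path), a location such as `file:///srv/glance/<image-id>` would take the direct branch, while any other scheme would fall back to the usual streaming path.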