Grizzly Nova: what's new in the API CLI?

Quick Nova API CLI updates.

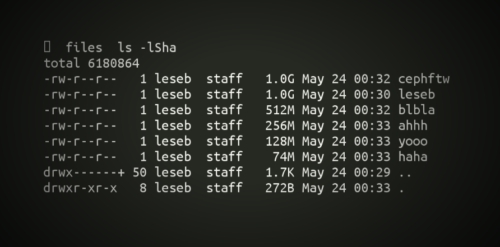

Archiving deleted rows made easy by Grizzly.

How to quickly deploy an MDS server.

The title of this article is not very explicit; I actually had trouble finding a proper one, so let me clarify a bit. Here is the context: I was wondering whether Glance was capable of converting images within its store. The quick answer is no, but I think such a feature is worth implementing. Glance could, for example, convert a QCOW2 image to RAW format. Usually, if you already have an image within, let’s say, a Ceph cluster (RBD), you have to download the image (since you probably don’t have the source image file anymore), manually convert it with qemu-img (QCOW2 -> RAW) and finally import it into Glance. Enough talk about this; I’ll address it in a future article.

For now, let’s stick to the first matter. Imagine that you have a KVM cluster backed by a Ceph cluster and your CTO wants you to migrate the whole environment to OpenStack because it’s trendy (joking, OpenStack just rocks!). You’re not going to back up all your images and then build a new cluster or something like that; you want OpenStack (Glance) to be aware of your Ceph cluster. Generally speaking, you just have to connect Glance to one of your image pools. After this, the only thing left to do is to create the images in Glance (it’s more a matter of registering the image IDs and metadata than creating new images). No worries, here’s the explanation. Longest introduction ever.
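The manual conversion step mentioned above (QCOW2 to RAW with qemu-img) can be sketched as follows. This is only a minimal sketch, not the author's script: the function names and file names are hypothetical, and it assumes `qemu-img` is installed and on the PATH. The example only builds and prints the command line; `convert_image` is what would actually run it.

```python
import subprocess


def qemu_img_convert_cmd(src, dst, src_fmt="qcow2", dst_fmt="raw"):
    """Build the qemu-img command line that converts src into dst.

    Hypothetical helper: -f gives the input format, -O the output format.
    """
    return ["qemu-img", "convert", "-f", src_fmt, "-O", dst_fmt, src, dst]


def convert_image(src, dst):
    """Run the conversion; raises CalledProcessError if qemu-img fails."""
    subprocess.run(qemu_img_convert_cmd(src, dst), check=True)


if __name__ == "__main__":
    # File names are placeholders for illustration only.
    print(" ".join(qemu_img_convert_cmd("image.qcow2", "image.raw")))
```

The resulting RAW file is what you would then import into Glance.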

Grizzly brought multi-backend functionality and tons of new drivers to Cinder. The main purpose of this article is to demonstrate how we can take advantage of the tiering capability of Ceph.

Materials to start playing with Ceph. This Vagrant box contains an all-in-one Ceph installation.

Sometimes it’s just fun to put the theory into practice, just to notice “oh well, it works as expected”. This is why today I’d like to share some experiments with two really specific flags: noout and nodown. The behaviors described in this article are well known because of the design of Ceph, so don’t yell at me: ‘Tell us something we don’t know!’. Simply see this article as a set of exercises that demonstrate some of Ceph’s internal functions :-).
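For readers who want to try this kind of experiment, the flags are toggled with `ceph osd set <flag>` and `ceph osd unset <flag>`. The sketch below wraps the pair in a context manager so that the flag is always cleared afterwards. The helper names are hypothetical, the `runner` parameter is an assumption added so the logic can be exercised without a cluster, and real use assumes the `ceph` CLI and suitable credentials are available.

```python
import subprocess
from contextlib import contextmanager


def osd_flag_cmd(action, flag):
    """Build `ceph osd set|unset <flag>` (hypothetical helper)."""
    if action not in ("set", "unset"):
        raise ValueError("action must be 'set' or 'unset'")
    return ["ceph", "osd", action, flag]


def _run(cmd):
    # Default runner: call the real ceph CLI and fail loudly on error.
    subprocess.run(cmd, check=True)


@contextmanager
def osd_flag(flag, runner=_run):
    """Set a cluster-wide OSD flag, and unset it again on exit."""
    runner(osd_flag_cmd("set", flag))
    try:
        yield
    finally:
        runner(osd_flag_cmd("unset", flag))


if __name__ == "__main__":
    # Dry run: record the commands instead of executing them.
    issued = []
    with osd_flag("noout", runner=issued.append):
        pass  # e.g. stop an OSD here and observe the cluster state
    print(issued)
```

Passing `runner=issued.append` gives a dry run; dropping the argument would call the real CLI.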

Quite recently, François Charlier and I worked together on the Puppet modules for Ceph on behalf of our employer, eNovance. In fact, François started working on them last summer; back then he completed the Monitor manifests. So basically, we worked on the OSD manifest. The modules are in pretty good shape, thus we thought it was important to tell the community about them. That’s enough talk, let’s dive into these modules and explain what they do. See below what’s available:

-f -d agcount=<cpu-core-number> -l size=1024m -n size=64k and finally mounted with: rw,noatime,inode64. Then it will mount all of them and append the appropriate lines to the fstab file of each storage node. Finally, the OSDs will be added to Ceph. All the necessary materials (sources and how-to) are publicly available (and for free) under the AGPL license on eNovance’s GitHub. Those manifests do the job quite nicely, although we still need to work on MDS (90% done, just needs validation), RGW (0% done) and a more flexible implementation (authentication and filesystem support). Obviously, comments, constructive criticism and feedback are more than welcome. So don’t hesitate to drop an email to either François ([email protected]) or me ([email protected]) if you have further questions.

Short short update.

A Placement Group (PG) aggregates a series of objects into a group and maps the group to a series of OSDs. A common mistake while creating a pool is to use the rados command, which by default creates a pool with 8 PGs. Sometimes you don’t really know how to set this value, so you use the ceph command but give it an extremely high value. Both cases are bad and could lead to some unfortunate situations. In this article, I will explore some methods to work around this major problem.
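As a rough illustration of what a sensible value looks like, a commonly cited rule of thumb from the Ceph documentation is about 100 PGs per OSD, divided by the replica count and rounded up to the next power of two. The sketch below applies that heuristic. It is an assumption brought in for illustration, not necessarily one of the methods explored in the article, and the function name and the default of 100 are hypothetical.

```python
def suggested_pg_count(num_osds, replicas, pgs_per_osd=100):
    """Rule-of-thumb PG count for a pool (heuristic, not a hard rule).

    Computes (num_osds * pgs_per_osd) / replicas and rounds the result
    up to the next power of two.
    """
    if num_osds < 1 or replicas < 1:
        raise ValueError("num_osds and replicas must be >= 1")
    raw = (num_osds * pgs_per_osd) / replicas
    power = 1
    while power < raw:
        power *= 2
    return power


if __name__ == "__main__":
    # 9 OSDs, 3 replicas: 900 / 3 = 300, rounded up to 512.
    print(suggested_pg_count(9, 3))
```

For a small 9-OSD cluster with three replicas, both the default of 8 and a value in the tens of thousands are far from the 512 this heuristic suggests.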