Mount a specific pool with CephFS

The title of the article is a bit wrong, but it’s certainly the easiest to understand :-).

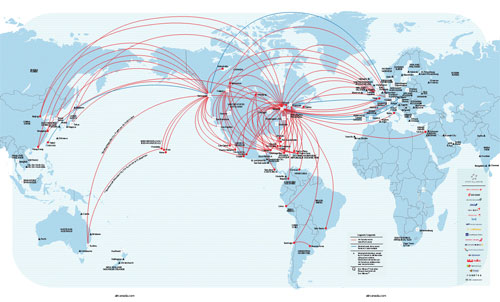

It’s fair to say that geo-replication is one of the features most requested by the community. This article is a draft, a PoC of Ceph geo-replication.

Disclaimer: yes, this setup is tricky, and I don’t guarantee that it will work for you.

How to use a memory profiler to track memory usage of Ceph daemons!

A lot of new features came with version 0.55 of Ceph; one of them is that CephX authentication is enabled by default. If you run v0.48 Argonaut without CephX and want to upgrade to the latest Bobtail, you might run into some problems if you don’t edit your configuration file.

Configure logging in Ceph.

Several ways to find out where your instances run.

Every once in a while you really want to clean up the token table of the Keystone database. A couple of weeks ago, while backing up my cloud controller, I noticed that the backup of the Keystone database took longer than the backups of the other databases. After that, I checked the size of the (compressed) dump: 60MB. Hmm, but there is almost nothing in the Keystone database: users, tenants… wait.. could it be TOKENS?!

This article introduces a simple use case for storage providers. For various reasons, some customers would like to pay more for a fast storage solution, while others would prefer to pay less for a reasonable storage solution.

I’ve often been asked why CephFS is not ready. Is this statement still true?

In this article, I will introduce the block device cacher concept and the different solutions available. I will also (because it’s the title of the article) explain how to increase the performance of an RBD device with the help of flashcache in a Ceph cluster.