The Hammer release brought support for a new RBD image feature called object map.

The object map tracks which of the objects backing the image are actually allocated.

This is especially useful for operations such as resize, import, export, flattening clones and size calculation, since the client does not need to check every object to find out whether it exists.

The client simply looks it up in that table.
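To make the "look it up in a table" idea concrete, here is a toy model in Python. It is a sketch under stated assumptions, not the RBD implementation: it keeps one flag per object and assumes the default 4 MiB object size. The real object map lives in RADOS and uses its own on-disk format. The class and method names are hypothetical.

```python
OBJECT_SIZE = 4 * 1024 * 1024  # assumption: default RBD object size of 4 MiB


class ObjectMap:
    """Toy object map: one flag per object backing an image (hypothetical)."""

    def __init__(self, image_size: int, object_size: int = OBJECT_SIZE) -> None:
        self.object_size = object_size
        # Integer ceiling division: a partial last object still counts.
        self.num_objects = (image_size + object_size - 1) // object_size
        self.allocated = bytearray(self.num_objects)  # 0 = absent, 1 = allocated

    def mark_write(self, offset: int, length: int) -> None:
        """Flag every object touched by a write of `length` bytes at `offset`."""
        if length <= 0:
            return
        first = offset // self.object_size
        last = (offset + length - 1) // self.object_size
        for index in range(first, last + 1):
            self.allocated[index] = 1

    def exists(self, index: int) -> bool:
        """Answer "does object N exist?" from the table, without asking the OSDs."""
        return self.allocated[index] == 1

    def allocated_bytes(self) -> int:
        """Upper bound on used space, computed from the map alone."""
        return sum(self.allocated) * self.object_size
```

For a 10 GiB image, `ObjectMap(10 * 1024**3)` tracks 2560 objects. After `mark_write(0, 6 * 1024**2)`, objects 0 and 1 are flagged and `allocated_bytes()` returns 8 MiB, all without touching a single object on the cluster.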

Read On...

RBD readahead was introduced with Giant.

Read On...

Quick tip.

Simply check the diff between the applied configuration in your ceph.conf and the default values on an OSD.
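The OSD admin socket exposes a `config diff` command for this (`ceph daemon osd.0 config diff`). The comparison it performs boils down to the sketch below, which works on two hypothetical dictionaries mapping option names to values (for example, parsed from JSON dumps). The function name and the `"<unset>"` marker are assumptions made for illustration.

```python
from typing import Dict, Tuple


def config_diff(defaults: Dict[str, str],
                current: Dict[str, str]) -> Dict[str, Tuple[str, str]]:
    """Return {option: (default, current)} for every option whose value differs.

    Options that have no default entry are reported with "<unset>" as default.
    """
    diff: Dict[str, Tuple[str, str]] = {}
    for option, value in current.items():
        default = defaults.get(option, "<unset>")
        if value != default:
            diff[option] = (default, value)
    return diff
```

Only the options you actually changed in `ceph.conf` (or injected at runtime) show up, which is exactly what you want to review before filing a bug or comparing two nodes.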

Read On...

Ceph monitors make use of leveldb to store cluster maps, users and keys.

Since the store is already there, Ceph developers decided to expose it through the monitor interface.

As a result, monitors have a built-in capability that lets you store blobs of data as key/value pairs.

This feature has been around for quite some time now (roughly two years), but it hasn't received much attention since.

I even realised that I had never blogged about it :).
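As a minimal sketch, assuming the Hammer-era `ceph config-key put <key> <value>` and `ceph config-key get <key>` subcommands (check the names against your release), a small Python wrapper could look like this. The `MonKV` class and the injectable `runner` are hypothetical; the runner exists so the logic can be exercised without a live cluster.

```python
import subprocess
from typing import Callable, List

Runner = Callable[[List[str]], str]


def run_ceph(args: List[str]) -> str:
    """Run the ceph CLI and return its stdout (needs a reachable cluster)."""
    result = subprocess.run(["ceph", *args], check=True,
                            capture_output=True, text=True)
    return result.stdout


class MonKV:
    """Store and fetch small blobs in the monitors' key/value store."""

    def __init__(self, runner: Runner = run_ceph) -> None:
        self.runner = runner

    def put(self, key: str, value: str) -> None:
        # Equivalent to: ceph config-key put <key> <value>
        self.runner(["config-key", "put", key, value])

    def get(self, key: str) -> str:
        # Equivalent to: ceph config-key get <key>; stdout is returned as-is.
        return self.runner(["config-key", "get", key])
```

Keep the blobs small: every value is replicated through the monitors' store, so this is meant for bits of metadata, not bulk data.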

Read On...

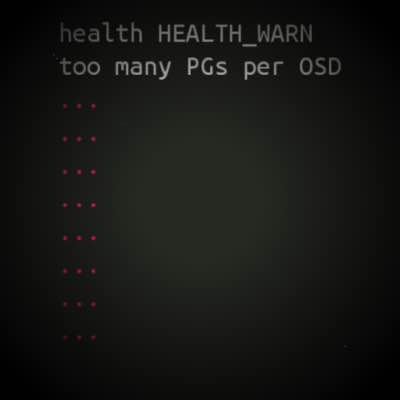

Debugging scrubbing errors can be tricky, and you don't necessarily know how to proceed.

Read On...

This is a quick note about Ceph networks, so do not expect anything lengthy here :).

Ceph networks are usually presented as the public network and the cluster (private) network.
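In `ceph.conf` these are the `public network` and `cluster network` options, each given as a CIDR subnet (for example `192.168.0.0/24` and `10.0.0.0/24`). A daemon then binds to a local address that falls inside the configured subnet. The sketch below is a simplified, hypothetical model of that matching step using Python's `ipaddress` module; the function name and addresses are illustrative only.

```python
import ipaddress
from typing import Iterable, Optional


def pick_address(host_ips: Iterable[str], network: str) -> Optional[str]:
    """Return the first host IP inside `network` (CIDR), or None if none matches."""
    subnet = ipaddress.ip_network(network)
    for ip in host_ips:
        if ipaddress.ip_address(ip) in subnet:
            return ip
    return None
```

With host addresses `["127.0.0.1", "192.168.0.11", "10.0.0.11"]`, the public network `192.168.0.0/24` selects `192.168.0.11` and the cluster network `10.0.0.0/24` selects `10.0.0.11`, so client traffic and replication traffic end up on different interfaces.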

However, it is never mentioned that you can use a separate ne

Read On...

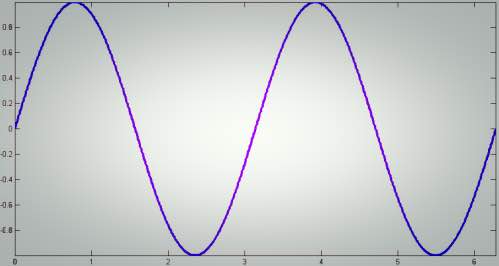

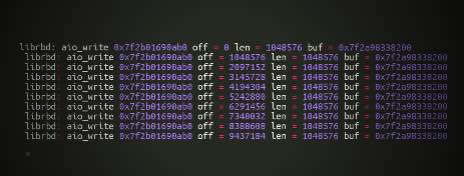

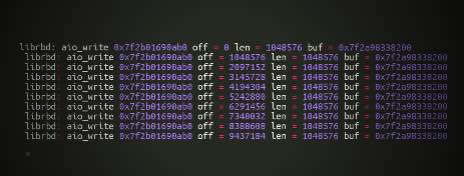

A simple trick to analyse the write patterns applied to your Ceph journal.
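The trick behind the link may rely on a different tool. As a generic, swapped-in approach, the average write size hitting a journal device can be estimated from the kernel's cumulative counters in `/proc/diskstats`: counting the major number as field 1, field 8 is writes completed and field 10 is sectors written, always in 512-byte sectors. The device name `sdb` and the function names below are hypothetical.

```python
from typing import Tuple

SECTOR_BYTES = 512  # /proc/diskstats always counts 512-byte sectors


def read_counters(diskstats: str, device: str) -> Tuple[int, int]:
    """Return (writes completed, sectors written) for `device`."""
    for line in diskstats.splitlines():
        fields = line.split()
        if len(fields) >= 10 and fields[2] == device:
            return int(fields[7]), int(fields[9])
    raise KeyError(device)


def avg_write_size(before: Tuple[int, int], after: Tuple[int, int]) -> float:
    """Average bytes per write between two snapshots of the counters."""
    writes = after[0] - before[0]
    sectors = after[1] - before[1]
    return sectors * SECTOR_BYTES / writes if writes else 0.0
```

On a live host, read `/proc/diskstats` twice a few seconds apart (for example with `time.sleep(10)` in between) and pass both snapshots to `avg_write_size`. Because the counters are cumulative since boot, only the difference reflects the current workload.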

Read On...

I recently improved a playbook for Ceph rolling upgrades that I wrote a couple of months ago.

This playbook is part of the Ceph Ansible repository and is available as `rolling_update.yml`.

Let’s have a look at it.
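The playbook itself is behind the link. As a rough, hypothetical illustration of the ordering a rolling upgrade generally follows (monitors first, then OSD hosts one at a time under `noout`, then metadata servers), here is a small Python function that produces an ordered plan. It is not a transcription of `rolling_update.yml`; the inventory group names `mons`, `osds` and `mdss` are assumptions.

```python
from typing import Dict, List


def rolling_upgrade_plan(inventory: Dict[str, List[str]]) -> List[str]:
    """Return upgrade steps in order: monitors, then OSD hosts, then MDS hosts.

    Hosts are handled one at a time so monitors keep quorum and placement
    groups stay available. Setting `noout` keeps restarting OSDs from being
    marked out, so no data is rebalanced while they restart.
    """
    steps: List[str] = []
    for mon in inventory.get("mons", []):
        steps += [
            f"upgrade packages on {mon}",
            f"restart ceph-mon on {mon}",
            "wait for monitor quorum",
        ]
    osd_hosts = inventory.get("osds", [])
    if osd_hosts:
        steps.append("ceph osd set noout")
        for host in osd_hosts:
            steps += [
                f"upgrade packages on {host}",
                f"restart ceph-osd daemons on {host}",
                "wait until all PGs are active+clean",
            ]
        steps.append("ceph osd unset noout")
    for mds in inventory.get("mdss", []):
        steps += [f"upgrade packages on {mds}", f"restart ceph-mds on {mds}"]
    return steps
```

Serialising the hosts is the key design choice: upgrading one host at a time trades speed for the guarantee that the cluster never loses quorum or more than one failure domain at once.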

Read On...

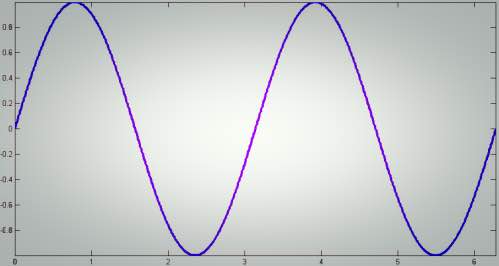

Analyse the IO patterns of all your guest machines.

Read On...