See you at the OpenStack Summit

Next week is the OpenStack Summit. I will be attending and giving a talk, “How to Survive an OpenStack Cloud Meltdown with Ceph”.

See you there!

I just realized there hasn’t been much coverage of this functionality, even though we presented it last year at the OpenStack Summit.

Since Ceph Jewel, we have had the RBD mirroring functionality, and people have started using it for multi-site and disaster recovery use cases.

The tool is not perfect, but it is rock solid; expect many enhancements in future releases, such as support for multiple peers and multiple daemons.

From a pure OpenStack perspective, we don’t really want to add any code to Glance Store to enable this feature.

The reason is simple: Glance’s store code looks up specific Ceph features in the Ceph configuration file itself.

So there is no point in adding a new configuration flag to Glance, something like enable_image_journaling.

The operator will only have to configure Ceph, that’s it.
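The feature RBD mirroring relies on is image journaling, and on the Glance node it is typically switched on through the `rbd default features` option in the `[client]` section of `ceph.conf`, which takes a bitmask. The small helper below is a sketch (the function name `rbd_default_features` is hypothetical) that computes that value from feature names, using the librbd feature bit values and checking the dependencies librbd enforces (journaling and object-map need exclusive-lock, fast-diff needs object-map).

```python
# Sketch: compute the value for the `rbd default features` option in ceph.conf.
# Bit values mirror librbd's RBD_FEATURE_* constants.
RBD_FEATURES = {
    "layering": 1,
    "striping": 2,
    "exclusive-lock": 4,
    "object-map": 8,
    "fast-diff": 16,
    "deep-flatten": 32,
    "journaling": 64,
}

# A feature can only be enabled if the feature it depends on is enabled too.
REQUIRES = {
    "object-map": "exclusive-lock",
    "fast-diff": "object-map",
    "journaling": "exclusive-lock",
}


def rbd_default_features(names):
    """Return the integer bitmask for the given RBD feature names (hypothetical helper)."""
    enabled = set(names)
    for feature in enabled:
        if feature not in RBD_FEATURES:
            raise ValueError(f"unknown RBD feature: {feature}")
        dep = REQUIRES.get(feature)
        if dep and dep not in enabled:
            raise ValueError(f"{feature} requires {dep}")
    return sum(RBD_FEATURES[f] for f in enabled)


if __name__ == "__main__":
    features = ["layering", "exclusive-lock", "object-map",
                "fast-diff", "deep-flatten", "journaling"]
    # Line to place under the [client] section of ceph.conf on the Glance node
    print(f"rbd default features = {rbd_default_features(features)}")
```

Running it prints `rbd default features = 125`. Glance then creates new images with journaling enabled, and mirroring itself is still set up on the Ceph side.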

Yes people, I’m still alive :). As you might have noticed, I’ve been having a hard time keeping up the pace with blogging. That’s mainly because I’ve been traveling a lot these days and preparing for conferences. It’s a really busy end of the year for me :).

Fortunately, I’m still finding the time to work on new features for projects I like. As you might know, I’ve been busy working on ceph-ansible and ceph-docker, trying to reconcile both and make sure they work well together. In ceph-docker, we have an interesting container image that I already presented here. I was recently thinking we could use it to simplify the Ceph bootstrapping process in DevStack. The patch I recently merged doesn’t get rid of the “old” way to bootstrap; it is just a new addition, a new deployment method.

In practice, this doesn’t change anything for me, but it lets us validate that a containerized Ceph runs without problems and brings the same functionality as a non-containerized Ceph. Without further ado, let’s jump into this!

With the help of two colleagues, I’ve been busy writing this little book about storage in OpenStack. The book is quite general but gives some good perspective on the storage challenges you will face in OpenStack. It explains why traditional storage solutions will not work and how Ceph addresses these issues, ultimately describing why Ceph is the best solution for your OpenStack cloud.

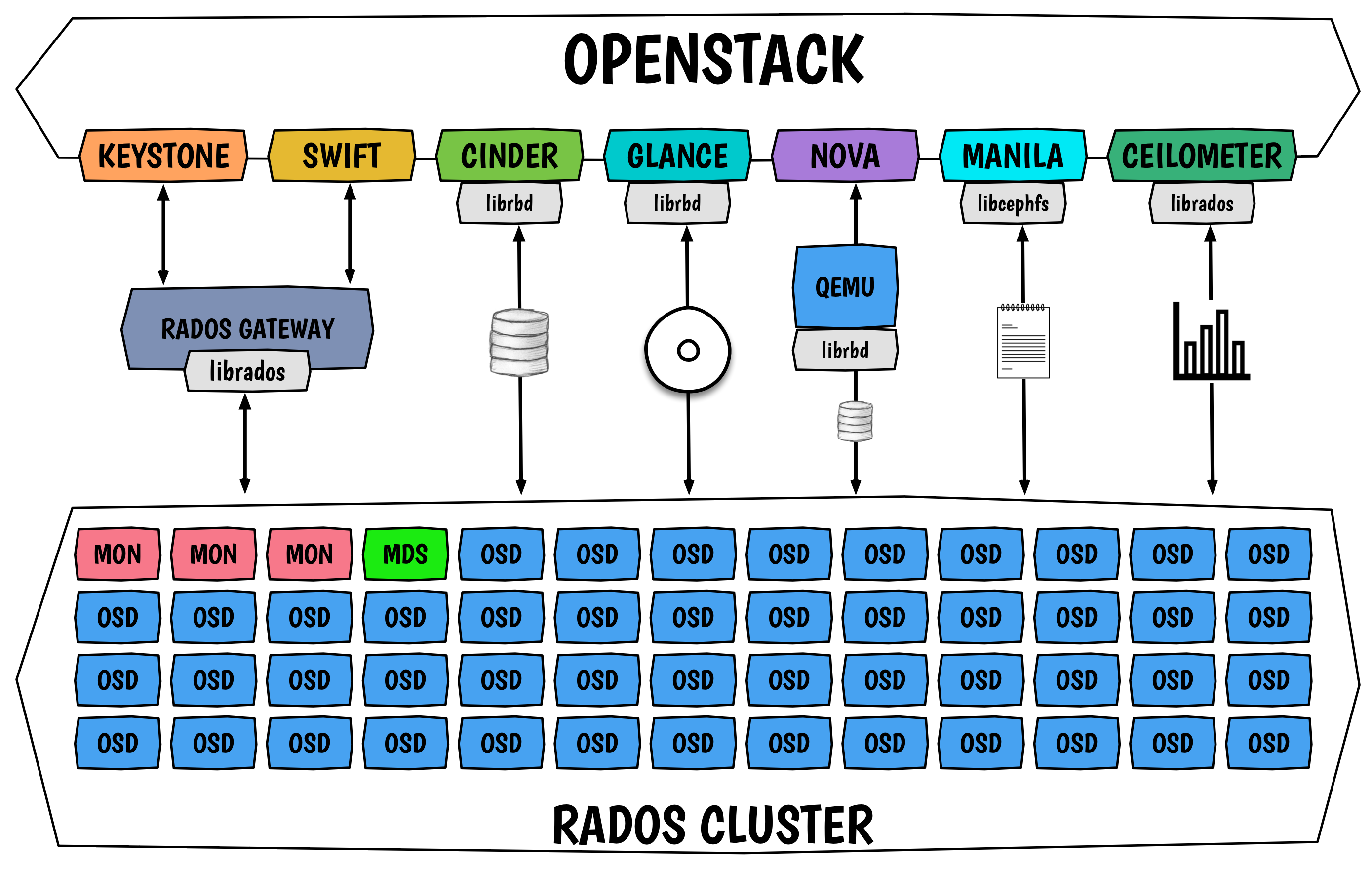

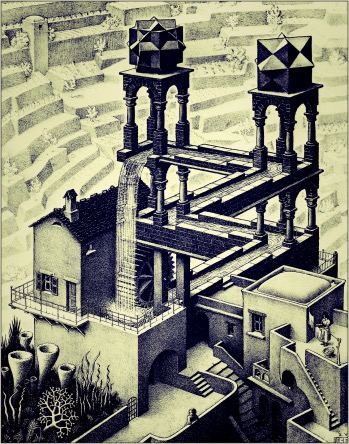

Picture of our galaxy :). This picture describes the state of the integration of Ceph into OpenStack.

OpenStack Mitaka brought support for a new feature, a follow-up to the Nova discard implementation: it is now possible to configure discard in Cinder on a per-backend basis.
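As a minimal sketch, here is what such a per-backend setting could look like, rendered with Python’s `configparser`. To my knowledge the backend option is `report_discard_supported`, but treat that as an assumption and check it against your release’s Cinder configuration reference; the backend name `ceph-rbd` and the pool `volumes` are illustrative. Nova must also have discard enabled on its side (`hw_disk_discard = unmap` in the `[libvirt]` section of `nova.conf`).

```python
import configparser
import io


def render_cinder_conf(backend="ceph-rbd", pool="volumes", discard=True):
    """Render a cinder.conf with one RBD backend advertising discard support.

    Backend name and pool are illustrative assumptions.
    """
    conf = configparser.ConfigParser()
    conf["DEFAULT"] = {"enabled_backends": backend}
    conf[backend] = {
        "volume_driver": "cinder.volume.drivers.rbd.RBDDriver",
        "volume_backend_name": backend,
        "rbd_pool": pool,
        # Assumed option name: tells Nova this backend can accept discard requests
        "report_discard_supported": str(discard),
    }
    buf = io.StringIO()
    conf.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    print(render_cinder_conf())
```

Because the flag lives in the backend section, two backends in the same `cinder.conf` can advertise discard differently.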

The Summit is almost here, and it is time to vote for the presentations you want to see :).

I have been waiting for this feature for more than a year, and it is almost there! It likely brings us one step closer to diskless compute nodes. This “under the hood” article explains the mechanisms in place to perform fast and efficient Nova instance snapshots directly in Ceph.
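To give an idea of why this is fast, the sketch below models the sequence of RBD operations such a Ceph-only snapshot can use. It is a simplified illustration loosely following Nova’s libvirt RBD image backend, not Nova’s actual code, and the function name `direct_snapshot_plan` and its parameters are hypothetical. The key point is that the clone is copy-on-write (metadata only), and the flatten copies data inside the Ceph cluster rather than through the compute node.

```python
def direct_snapshot_plan(fsid, vm_pool, disk, image_pool, image_id, snap="snap"):
    """Return the ordered RBD operations and the resulting Glance location.

    Illustrative model only (hypothetical helper, not Nova's implementation).
    """
    tmp_snap = f"{disk}@{image_id}"  # temporary snapshot on the instance disk
    steps = [
        f"snap create + protect {vm_pool}/{tmp_snap}",
        # Copy-on-write clone into the Glance pool: metadata only, near instant
        f"clone {vm_pool}/{tmp_snap} -> {image_pool}/{image_id}",
        # Flatten so the new image no longer depends on the instance disk;
        # the data copy happens inside the Ceph cluster
        f"flatten {image_pool}/{image_id}",
        # The temporary snapshot is no longer needed once the clone is flat
        f"unprotect + remove {vm_pool}/{tmp_snap}",
        # RBD-backed Glance images are served from a protected snapshot
        f"snap create + protect {image_pool}/{image_id}@{snap}",
    ]
    # Glance's RBD store location format: rbd://<fsid>/<pool>/<image>/<snapshot>
    location = f"rbd://{fsid}/{image_pool}/{image_id}/{snap}"
    return steps, location


if __name__ == "__main__":
    steps, location = direct_snapshot_plan(
        "fsid-1234", "vms", "inst_disk", "images", "img-uuid")
    for step in steps:
        print(step)
    print(location)
```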

Configure a Nova hypervisor with more than one backend to store the instance’s root ephemeral disks.